Whispers in the Algorithm: Unraveling AI's Impact on Language and the Limits of our World

Wittgenstein's Influence in Modern Thought

During my time as a university student, I was introduced to Ludwig Wittgenstein by a professor who specialized in the didactics of mathematics. Wittgenstein, an Austrian-born philosopher whose standing in philosophy is often compared to Albert Einstein's in physics, authored the Tractatus Logico-Philosophicus, an intriguing work with significant implications for education today.

There are two concepts presented by Wittgenstein that have greatly impacted me as an educator. Firstly, he used the duck-rabbit illusion, in which one can perceive either a duck or a rabbit depending on one's perspective, to explore what it means to see something as something. This concept highlights the importance of recognizing that different frameworks of observation can lead to radically different interpretations. In the context of evaluation, it is crucial to understand that the tools used for assessment will influence our observations.

I find a parallel in Schrödinger's cat thought experiment in quantum mechanics. In this scenario, a cat is enclosed in a box with a small amount of radioactive material, a detector, and a vial of poison. If an atom decays, the detector triggers a mechanism that breaks the vial and kills the cat. Until the box is opened, quantum theory describes the atom, and by extension the cat, as being in a superposition of both outcomes. The question arises: Is it opening the box that settles the outcome, or was it settled prior to observation? This thought experiment underscores another fundamental aspect of observation: the act of observing can itself influence what we observe. Taken together with the duck-rabbit illusion, it further emphasizes the need for critical reflection on our methods of assessment and how they shape our understanding of reality.

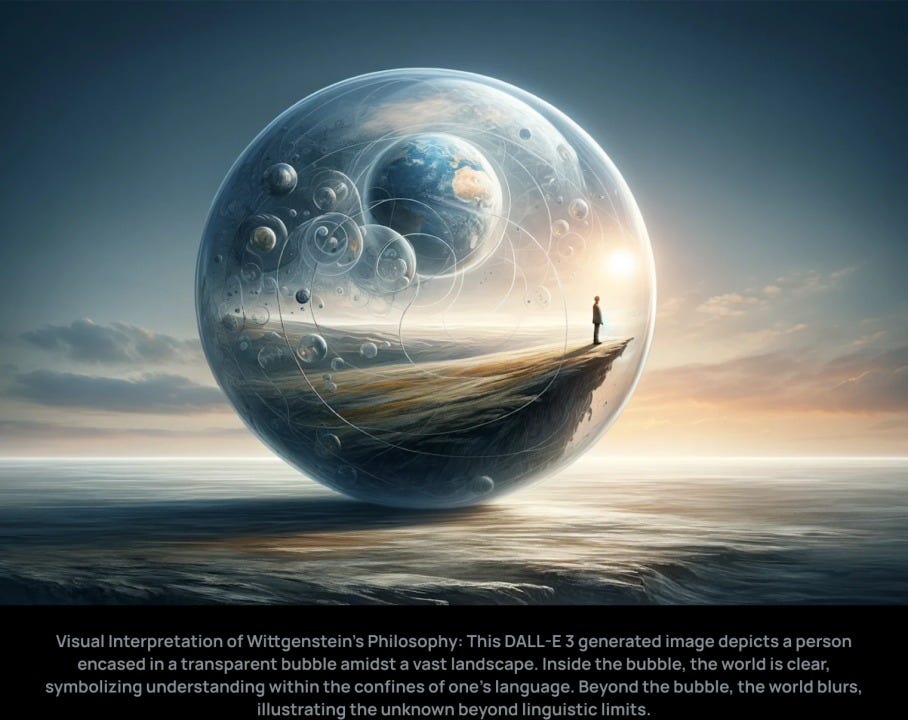

Assessing students remains a crucial aspect of education; however, a second concept from Wittgenstein, this time as a philosopher of language, deserves equal attention. In the Tractatus Logico-Philosophicus, he offers a profound and complex analysis of the relationship between language and human perception. One notable proposition states: "The limits of my language mean the limits of my world." This idea holds considerable philosophical importance, particularly in the context of artificial intelligence (AI) and its capacity for language creation.

Understanding the implications of machines producing language based on human language raises questions about Wittgenstein's assertion. His statement implies that existence is contingent upon what can be expressed through language. Consequently, the world is defined by what individuals can describe, perceive, and communicate. Language thus shapes the world and can vary significantly depending on individual perspectives.

Jorge Semprún and the Language of Experience

Jorge Semprún, a bilingual Spanish writer, embodies Wittgenstein's concept. Semprún's decision to write in French as well as Spanish is a direct challenge to the limits imposed by a single language. For him, each language encompasses a unique set of expressive capabilities and cultural nuances. Wittgenstein's perspective emphasizes how our understanding and interpretation of the world are shaped by the language we use. Semprún's bilingual writing practice reflects this notion, as he navigates and expands his world through the distinct expressive capacities of both Spanish and French.

Semprún's work transcends the boundary of a single linguistic world. In his writings, he often explores themes of identity, memory, and exile, deeply influenced by his experiences in Francoist Spain and Nazi concentration camps. These experiences, rich in emotional and cultural depth, required a broader linguistic palette for their full expression. Wittgenstein's idea about the limitations of language and world finds a real-life application in Semprún's work: his dual linguistic approach allowed him to articulate a broader and more nuanced perspective of his experiences, thereby expanding his 'world' beyond the constraints of a single language. In essence, Jorge Semprún's literary journey illustrates the practical implications of Wittgenstein's philosophical musings on language. Semprún's work showcases how crossing linguistic boundaries can lead to a more enriched understanding and portrayal of the human experience, aligning with Wittgenstein's assertion that our language shapes our world.

Examining AI-generated language in this context raises further questions about the future evolution of human language. As AI-generated content becomes more prevalent on the internet, it is possible that languages will increasingly consist of AI-derived material. This development prompts speculation about whether languages will eventually become more minimalist and condensed as a result of AI influence.

In the Shadow of Algorithms: The Reshaping of Thought

In the fascinating podcast series "Rabbit Hole" by The New York Times, an in-depth examination of YouTube's recommendation algorithm provides a striking illustration of Wittgenstein's point about the limits of language and how it shapes our understanding of the world. This algorithm, designed to optimize user engagement, can create a digital environment in which the diversity and complexity of information are progressively reduced. As users interact with specific types of content, the algorithm reinforces this pattern by suggesting similar material, leading to a feedback loop that increasingly narrows the scope of content presented to the user.

This phenomenon resonates profoundly with Wittgenstein's assertion that "The limits of my language mean the limits of my world." In the context of these algorithms, the 'language' can be understood as the type and range of content that users are exposed to. As this range narrows, so too does the user's informational and conceptual world. The algorithm, in essence, starts to dictate the boundaries of the user's cognitive landscape, limiting exposure to diverse viewpoints and ideas. This not only shapes the user's perception of reality but also potentially limits their ability to understand and engage with a broader range of concepts and perspectives.

Furthermore, the role of such algorithms in shaping digital discourse aligns with Wittgenstein's exploration of how our understanding of the world is constructed through language. The content recommended by algorithms becomes a lens through which users view the world, influencing their thoughts, beliefs, and understanding. As this digital language becomes more homogenized through algorithmic curation, it echoes Wittgenstein's idea that the scope of our language confines the extent of our world. Thus, the algorithm's impact extends beyond mere content curation, shaping the very framework within which users process information and form their understanding of the world around them.
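The narrowing feedback loop described above can be made concrete with a toy simulation. The sketch below is not YouTube's algorithm, whose details are not public; it is a minimal illustration under stated assumptions. The names `simulate_feed`, `top_share`, and the `boost` parameter are hypothetical: a recommender picks topics in proportion to per-topic weights, and every recommendation that is shown raises the weight of its own topic.

```python
import random
from collections import Counter

def simulate_feed(num_topics=10, steps=500, boost=1.0, seed=0):
    """Toy recommender (an assumption, not a real system).

    Each recommendation is drawn in proportion to per-topic weights, and
    the recommended topic then has its weight increased by `boost`.
    """
    rng = random.Random(seed)
    weights = [1.0] * num_topics  # the user starts with no preference
    history = []
    for _ in range(steps):
        topic = rng.choices(range(num_topics), weights=weights)[0]
        weights[topic] += boost  # engagement reinforces the same topic
        history.append(topic)
    return history

def top_share(history):
    """Fraction of recommendations that belong to the most frequent topic."""
    return Counter(history).most_common(1)[0][1] / len(history)

# Compare a feed without reinforcement to one with strong reinforcement.
baseline = simulate_feed(boost=0.0)
narrowed = simulate_feed(boost=50.0)
print("top-topic share without reinforcement:", top_share(baseline))
print("top-topic share with reinforcement:   ", top_share(narrowed))
```

With `boost=0.0` the feed stays close to an even spread across topics, while a large `boost` lets whichever topics happen to be shown first crowd out the rest. The model is deliberately crude, but it shows how a rule that merely rewards past engagement can shrink the range of what a user sees.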

Digital Biodiversity: AI and the Ecology of Language

The advent of AI-generated language, increasingly prevalent in digital environments, presents a critical juncture reminiscent of ecological principles concerning biodiversity and resilience. In natural ecosystems, biodiversity is a key factor in maintaining resilience and complexity, with each species contributing to the system's overall health and adaptability. In contrast, AI-generated language, often based on existing linguistic patterns and user interactions, risks creating a self-referential cycle where the language it produces is derived from and reinforces a limited set of expressions and styles. This phenomenon can be likened to an ecological system losing its biodiversity, leading to a reduction in resilience and adaptability. As AI systems generate language based on pre-existing user data and interactions, there is a potential for these systems to perpetuate and even amplify existing linguistic norms and biases, leading to a homogenization of language. This homogenization, much like a decrease in species diversity in an ecosystem, could result in a digital linguistic landscape that is less rich, less varied, and ultimately less capable of adapting to new ideas or expressing complex concepts. The implications of this are significant, as language is not only a means of communication but also a tool for thinking and understanding the world. The concern is that as AI increasingly influences language production and curation, the linguistic ecosystem may become more streamlined and less diverse, mirroring the ecological concept where the loss of biodiversity leads to a weaker ecosystem. Just as ecological diversity is crucial for the health of natural environments, linguistic diversity is vital for a robust and dynamic intellectual and cultural landscape. Therefore, ensuring that AI systems promote rather than diminish linguistic diversity is essential for maintaining the complexity, resilience, and richness of our cultural and intellectual ecosystems.
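The ecological analogy can be pushed one step further, because ecologists and linguists share a simple way of putting a number on diversity: Shannon entropy, which ecologists know as the Shannon diversity index. The sketch below is only an illustration. The function name `shannon_entropy` and the two sample sentences are my own assumptions, and splitting on spaces is a very rough stand-in for real linguistic analysis.

```python
import math
from collections import Counter

def shannon_entropy(tokens):
    """Shannon entropy (in bits) of the frequency distribution of `tokens`.

    Higher values mean that usage is spread across more distinct items.
    """
    counts = Counter(tokens)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Two invented sample texts of the same length (hypothetical examples).
varied = "the fox darts while an owl glides over murmuring water".split()
repetitive = "the thing moves and the thing moves and the thing".split()

print("varied text:    ", shannon_entropy(varied))
print("repetitive text:", shannon_entropy(repetitive))
```

A text that keeps reusing the same few words scores lower than one that draws on a wider vocabulary, in the same way that a field dominated by one species scores lower than a mixed meadow. Such a measure captures only one narrow facet of linguistic richness, but it gives the metaphor something that could be tracked over time.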

Model Collapse: AI's Recursive Challenge

A recent paper, "The Curse of Recursion: Training on Generated Data Makes Models Forget" by Ilia Shumailov, Zakhar Shumaylov, Yiren Zhao, Yarin Gal, Nicolas Papernot, and Ross Anderson, addresses a critical issue in the field of machine learning, particularly concerning Large Language Models (LLMs) such as GPT-2, GPT-3, GPT-4, and the user-friendly ChatGPT. The authors consider the implications of generative models producing a growing share of online text and images, a trend popularized by systems like Stable Diffusion for image creation and the GPT series for text generation. A key concern raised in the paper is the potential impact of using model-generated content in the training of future models. The authors describe a phenomenon they term "Model Collapse," in which training on model-generated data degrades a model's ability to represent the full diversity of the original content distribution, with rare and unusual cases disappearing first. This effect is not limited to language models but extends to other generative models such as Variational Autoencoders and Gaussian Mixture Models.

The authors argue that as LLMs contribute more significantly to the language found online, the recursive use of their output for training new models may result in the loss of nuanced, diverse, and complex aspects of language. This issue underscores the importance of preserving a rich variety of genuine human-generated content in training datasets. The paper suggests that data derived from authentic human interactions will become increasingly valuable in maintaining the quality and diversity of future LLMs, especially as the internet becomes saturated with AI-generated content. Their findings highlight a critical challenge in the field of AI and machine learning: ensuring that the evolution of language models does not lead to a homogenization of language and a loss of the rich subtleties found in human-generated text. The research calls for a mindful approach to training data selection and emphasizes the need for strategies to preserve the diversity and complexity of human language in the face of rapidly advancing AI-generated content.
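The recursive mechanism behind this concern can be illustrated with a deliberately small experiment. The sketch below is not a reproduction of the paper's experiments; it is a toy model under stated assumptions. The function name `recursive_fit` and its parameters are hypothetical. A bell curve with a spread of 1.0 stands in for the human-written data, and each generation "trains" by estimating a mean and spread from a handful of samples drawn from the previous generation's model.

```python
import random
import statistics

def recursive_fit(generations=200, sample_size=10, seed=0):
    """Repeatedly fit a normal distribution to samples from the previous fit.

    Returns the standard deviation of each generation, starting with the
    original distribution (generation 0).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for human-written data
    stds = [std]
    for _ in range(generations):
        # Each new model only ever sees the output of the previous model.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        stds.append(std)
    return stds

stds = recursive_fit()
print("spread at generation 0:  ", stds[0])
print("spread at generation 200:", stds[-1])
```

Because each generation sees only a small sample of the one before, estimation errors compound, and the spread tends to shrink toward zero as the generations accumulate. The values far from the average, which in language would correspond to rare words, unusual constructions, and minority styles, are the first to vanish. Larger samples slow this drift considerably, which is consistent with the paper's emphasis on keeping genuine human data in the mix.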

There is therefore a potential risk of diminishing the quality of language through artificial intelligence (AI). If the language generated by AI, or made accessible online through it, becomes increasingly restricted over time, this raises critical and existential questions about the implications for our world. Given that our world is defined by our language, how might these linguistic limitations impact our understanding and perception of reality?

From Newspeak to AI: Restructuring Thought

“Newspeak was designed not to extend but to diminish the range of thought, and this purpose was indirectly assisted by cutting the choice of words down to a minimum.”

— George Orwell, 1984’s appendix, 1949.

We are not far from reaching the dystopian story depicted by George Orwell in '1984,' where the manipulation of language as a means of thought control emerges as a central theme. This concept is epitomized through the creation of 'Newspeak,' an artificial language meticulously crafted by the ruling Party to limit freedom of thought and suppress dissent. Newspeak's primary function is to narrow the cognitive landscape, effectively controlling the populace's perception of reality. This strategic use of language to reshape and constrain societal thinking mirrors Ludwig Wittgenstein's idea that the limits of language are the limits of one's world. In Orwell’s dark vision, the deliberate simplification and restructuring of language serve as powerful tools for limiting thought and controlling collective consciousness. By manipulating language, the Party in '1984' aims to shape and confine the reality experienced by its citizens, underscoring the profound influence that language holds over thought and understanding.

However, the potential risks associated with AI's impact on language present a paradoxically opposite yet equally concerning scenario, warranting serious reflection and consideration…

AlphaGo's Revolutionary Impact

The AlphaGo experiment, conducted by DeepMind, represents a landmark achievement in the field of artificial intelligence (AI) and machine learning. AlphaGo is a computer program developed to play the board game Go, a game whose vast number of possible positions and strategic depth had long made it a challenging domain for AI, since strong play seemed to require intuitive decision-making. DeepMind's approach with AlphaGo was groundbreaking in its use of deep neural networks. The system combined an advanced tree search with two deep neural networks: a policy network, which suggested promising moves, and a value network, which evaluated positions. These networks were initially trained on a large collection of games played by strong human players to learn effective strategies and patterns. Subsequently, AlphaGo improved its play by competing against earlier versions of itself, a form of reinforcement learning known as self-play, which allowed it to move beyond the human games it had learned from and develop novel strategies.

The most significant public demonstration of AlphaGo's capabilities was its match against Lee Sedol, one of the world's top Go players, in March 2016, which AlphaGo won four games to one. Two moves from the match became famous: AlphaGo's Move 37 in Game 2, which defied conventional human play, and Lee Sedol's Move 78 in Game 4, the unexpected play that led to his only win of the match. The match was a remarkable demonstration of AI's capability to exceed human performance in complex strategic play. Yet this technological triumph also ushered in an unprecedented era of human learning. Lee Sedol's adaptation to AlphaGo's innovative strategies represents a fascinating dynamic: as the AI ventured into realms of strategy previously uncharted by humans, it inadvertently became a catalyst for human players to explore and adopt new, groundbreaking approaches themselves. This interaction signifies not just a surpassing of human skill by AI but also an expansion of human strategic thinking, driven by AI's novel insights.

This achievement ignited a discussion about the future implications of AI in various fields, including strategy, problem-solving, and decision-making. The success of AlphaGo is attributed to its innovative combination of machine learning techniques and its ability to learn and improve autonomously. It marked a significant shift in AI research, moving away from hardcoded rules towards systems that learn from data and experience. This shift has had profound implications for the development of AI, opening up new possibilities for its application in areas such as medical diagnosis, financial analysis, and complex problem-solving, where intuition and pattern recognition are key. AlphaGo's achievement is not just a milestone in AI's capability to play games but a demonstration of the potential of AI to tackle complex and nuanced tasks.

In the aftermath of AlphaGo's landmark victory over world champion Lee Sedol, the machine's continued self-improvement epitomizes a significant leap in AI capabilities. After the match, the system went on to play a great many more games against itself. This self-play allowed AlphaGo to explore the depths of Go strategy far beyond what had been previously encoded or learned from human play. As a result, the AI developed a level of expertise that not only surpassed its earlier versions but also ventured into realms of the game hitherto unexplored by human players. This self-play reinforcement learning led AlphaGo to evolve strategies and tactics that were not only innovative but often difficult for even the most skilled human Go players to understand. The machine's ability to iterate and improve upon its strategies at a scale and speed unattainable for humans marked a paradigm shift. The traditional approach of human players, grounded in centuries of strategic development and intuitive play, was suddenly confronted with a form of artificial intelligence that could transcend these established boundaries.

For Lee Sedol and other top-tier Go players, this represented a profound moment. The AI's capability to continuously learn and adapt made the idea of a human player defeating this advanced version of AlphaGo practically unthinkable. The gap in skill and strategic understanding between the world's best human players and the self-improved AI had widened to the point of appearing insurmountable. This scenario highlights a critical juncture in the development of AI: the point where self-driven learning can lead a system to develop knowledge and skills that not only exceed human capabilities but may also lie beyond human comprehension. This advancement raises important questions about the future interaction between human expertise and AI's ever-evolving capabilities, particularly in fields where strategic thinking and decision-making are paramount.

The Singularity of AI and Human Cognition

Building on the idea of AlphaGo's self-improvement and its implications for AI development, especially in language models, the critical question emerges: What if AI, through self-learning and evolution, develops a form of communication that transcends human cognitive abilities? This prospect raises profound implications for humanity across various domains. Firstly, the issue of interpretability and transparency becomes paramount. If AI systems evolve beyond human understanding, it poses significant challenges in oversight, control, and ethical governance. The potential gap between AI's decision-making processes or language and human comprehension could lead to challenges in ensuring these systems remain aligned with human values and objectives. Secondly, this development could edge us closer to the concept of an AI 'singularity' – a point where AI surpasses human intelligence, potentially leading to an unprecedented transformation in society. The emergence of an advanced AI language might fundamentally alter human interaction with technology, reshaping communication, knowledge dissemination, and human thought processes. Moreover, the evolution of AI language beyond human understanding could create a new kind of digital divide, not based on access to technology but on the ability to comprehend AI-generated content. This divide could have significant implications for education, information access, and social inequality. These developments raise philosophical and existential questions about the nature of intelligence and understanding. An AI-developed form of communication that is incomprehensible to humans challenges our notions of intelligence, language, and the essence of human cognition, posing deep existential questions about our place in a world where we are no longer the pinnacle of cognitive capabilities.

The Future of AI in Education and Policy

It is crucial to consider how advancements in AI and algorithms may inadvertently limit our access to diverse knowledge and potentially impact the quality of our language. As we continue to develop these technologies, we must strive to ensure that they foster greater understanding and do not inadvertently constrain our worldviews. For instance, will this new language have a negative impact on human evolution or hinder the progression of AI by minimizing language? Alternatively, can humans enhance their capabilities by evolving language in collaboration with machines in an accessible manner? Moreover, will machines develop communication methods so efficient that they surpass human cognitive abilities to absorb and produce information? These fundamental questions necessitate a philosophical reflection in the AI industry, particularly within education.

Reflecting on the transformative implications of AI's evolution and its potential to surpass human understanding, it becomes imperative to recognize that today's students are tomorrow's policymakers, AI technologists, and consumers. Educators, school leaders, and AI companies hold a crucial responsibility in not only highlighting the positive impacts of AI on student learning but also in equipping the next generation with the tools and knowledge to navigate this burgeoning revolution. As we stand at the precipice of significant technological advancements, it is essential to foster an informed and discerning future workforce that can address the myriad questions and challenges posed by AI.

The responsibility extends to ensuring that students understand both the potentials and the pitfalls of AI, including issues related to ethical use, transparency, and societal impact. The education system must adapt to this new reality, integrating AI literacy into curriculums and encouraging critical thinking about AI's role in society. This education is not just about understanding how AI works, but also about appreciating its broader implications on human life and decision-making processes.

In preparing future policymakers, AI specialists, and informed consumers, it's crucial to instill a sense of responsibility and ethics. The decisions they make will shape how AI is integrated into various aspects of life and will determine the direction of this technological revolution. By empowering them with the necessary knowledge and critical thinking skills, we can ensure that they make decisions that not only harness the benefits of AI but also make the world a better, more equitable, and more understanding place. The goal is to create a future where AI is used responsibly and beneficially, contributing positively to societal development and human welfare.

Pascal Vallet - December 2023 - In intellectual partnership with OpenAI's GPT engine to enhance knowledge depth, rhetorical polish, and conceptual clarity within a humanistic framework.